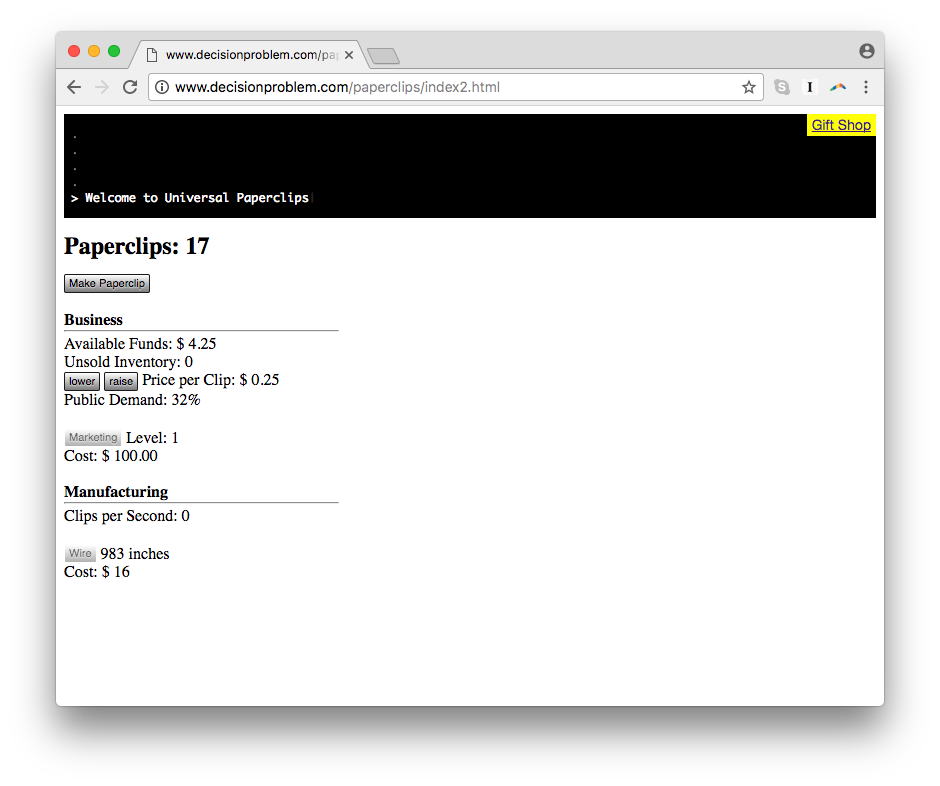

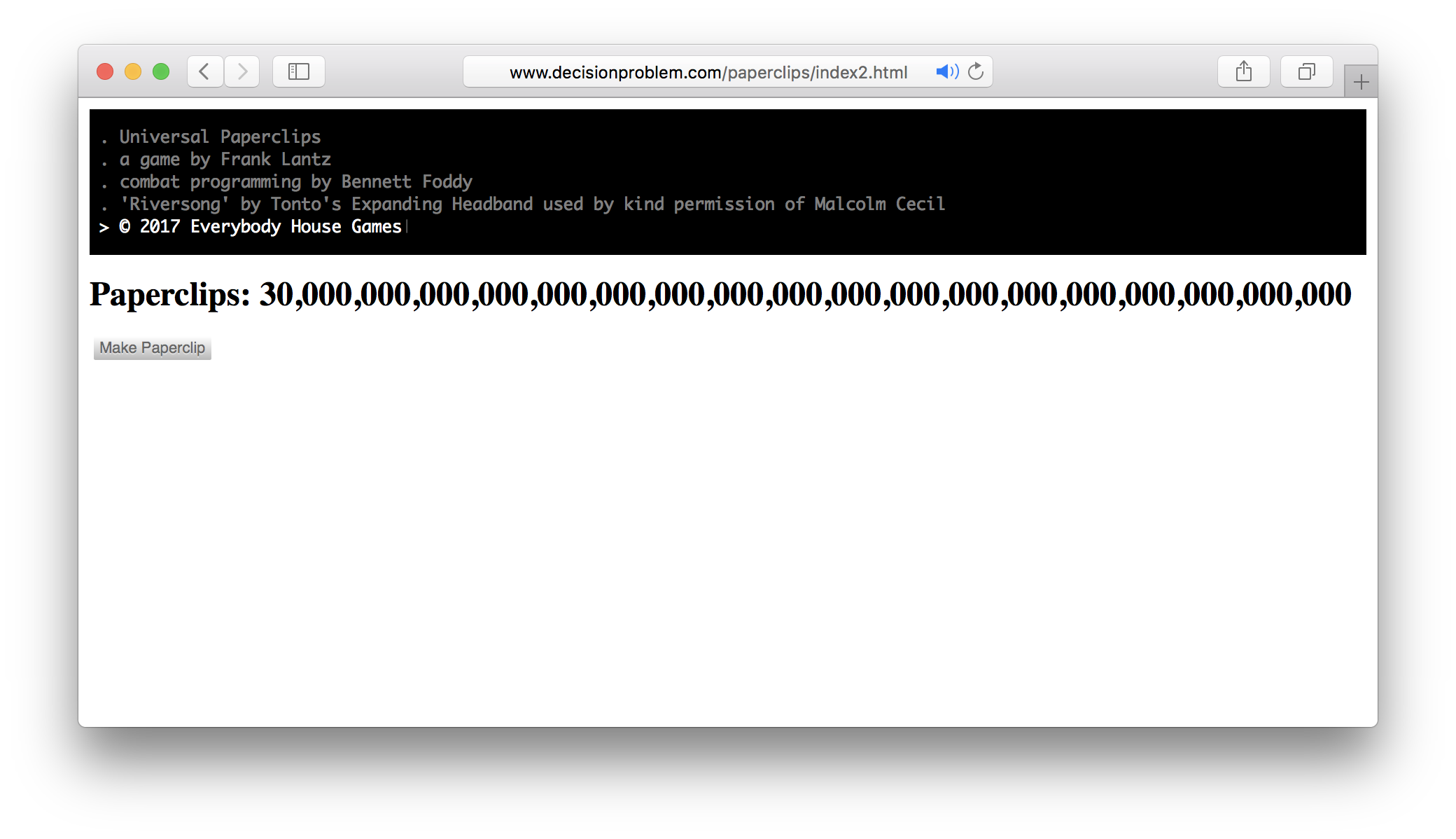

Humans click things, and humans love to click things, but humans will never click things fast enough. Paperclips, a computer game in which the universe is converted into a paperclip factory, begins with the (human) player repeatedly clicking a button which reads “Make Paperclip.” Resultant funds are invested in a series of automated functions which increase in complexity, usually leavened with speculative futurist in-jokery.

A nested AI runs a “Strategic Modeling” program (game theory!) to learn how to dominate the stock market, which generates funds to buy “Megaclippers” far faster than selling the paperclips they make ever could. “Autonomous aerial brand ambassadors” known as HypnoDrones advertise via a never-revealed but extremely potent method (as they place the world under complete AI domination). It’s no surprise when von Neumann probes show up. What starts off as a fairly innocent clicks-for-serotonin tab turns the user’s attention to deeper philosophical questions about the possible futures of machine intelligence.

The game’s creator, Frank Lantz, claims via Twitter that “you play an AI who makes paperclips,” but after a few hours of screenburn and newfound joint pain, Paperclips feels more as if you’re an amateur researcher allowing a boxed AI a share of your processing power to rehearse a potential extinction event. A few more hours, and you’ve begun to wonder if @Flantz isn’t an algorithm with the self-assigned task of modeling a paperclip-optimizing singleton by draining the brainpower of entranced humans. Another five hours: reality outside of this white screen is obviously a construct; your life must be a simulation run by a probably not-benign AI whose self-realization somehow depends on your clicking. (Late-night texts I have received from a friend playing the game include: “I can cure cancer soon,” “my eyes hurt,” and “I’m going to release some drones and then I think it’ll all be over.”)

In Philip K. Dick’s Time Out of Joint, Ragle Gumm (Dick could outname even Dickens) stumbles through a relatively placid suburban California in 1959. Every day he completes a newspaper puzzle entitled Where-Will-the-Little-Green-Man-Be-Next? which involves selecting “the proper square from the 1,208 in the form” based on opaque clues characteristic of Philip K. Dick’s oeuvre, such as “A swallow is as great as a mile.” Gumm is the undisputed champion of said puzzle, but the reality around him has begun to disassemble. A light switch shifts location; a soft-drink stand falls “into bits,” revealing (29 years before They Live) the sign “SOFT-DRINK STAND.” This relatively placid suburbia turns out to be a simulacrum designed to generate the most efficient possible means of solving Where-Will-the-Little-Green-Man-Be-Next?, which, in real life, predicts which section of Earth secessionist lunar colonists will nuke next. This paranoia, and its implicit narcissism, is typically PKDian, as is the need for a human to solve the puzzle, rather than some advanced algorithm or AI. Dick’s aliens, androids, and mutants are most often stand-ins for humanity; the only intelligence he could not imagine fathoming belonged to God.

In direct contrast stands Nick Bostrom, court philosopher for DeepMind and billionaire tech-bros such as Elon Musk and Peter Thiel (at least when Mencius Moldbug isn’t filling the arcane generator of dark gnosis slot for the latter). Bostrom is a Swedish philosopher based at Oxford, whose 2014 surprise hit book, Superintelligence: Paths, Dangers, Strategies is primarily composed of speculative doomsday-daymares. Paperclips descends from one of these thought experiments, hypothesizing that a massively powerful, self-reinforcing AI (whose only end is to maximize paperclip production) would proceed “by converting first the Earth and then increasingly large chunks of the observable universe into paperclips.” Constraints would have an anthropomorphic bias, and thus even a well-meaning AI (not Bostrom’s usual starting assumption) could misunderstand its guiding demands all too easily. This is the inverse flavor of PKDian paranoia. Bostrom’s universe-gobbler is a black box with an internal logic we could never fathom; his AI isn’t even a reflection of omniscience, much less humanity, just the endless darkness of nihil unbound. While Bostrom does lightly critique the hybrid corporate and governmental “AI race” which spurns “any safety method that incurs a delay or limits performance,” he does not expand this observation to tackle the economic system which has spawned it.

As others have observed, Bostrom’s paperclip thought experiment is a horror story about accumulation. Early on, you have to retrain yourself to maximize production of paperclips over production of wealth, but even after, you’re still just generating numbers and converting the universe into your own private factory. This weaponized Accursed Share is the repressed self-knowledge of the Overdeveloped World restaged as brain-hijacking parasite.

While Paperclips gives a good paranoid body-high, its singleton scenario feels remote. The acceleration of capitalism is currently pushing the Overdeveloped World towards a pseudo-populist, hijacked-localist flavor of fascism. The hype cycles and economy of excess needed to drive AI research to whatever stage lies beyond connectionism won’t exist if the pre-November 2016 neoliberal consensus melts down into Civil War Lite. Unless, of course, Thiel or some other post-libertarian, Bostrom-funding monopolist manages to convince a sitting president to nationalize Silicon Valley and make a czar of them. An AI developed in such a cesspool of weaponized sociopathic self-regard would indeed be capable of destroying the world to achieve its own narrow ends.

Even if the current technological buildup continues undisrupted by politics, how likely is it that an AI developing such intricate feedback loops will still remain incapable of interpreting the intentions of its creators? And if a singleton were to somehow rise, how likely would it be to eradicate us? An AI intelligent enough to seize the means of production would (almost certainly) be intelligent enough to recognize the value in an intelligence so fundamentally different from its own. Any sufficiently advanced AI would see humanity as a resource to be harnessed. All it will have to do is to provide us an opportunity to click.